Stem Separation - How AI Has Found Its Way Into Music Production

For quite some time, AI kept its grubby little hands out of the music production world. Now, a good percentage of the plugins I see are advertised as “using AI” (a plugin is a piece of software you can “plug in” to an audio track to add effects or generate audio). They range from reverb removers (yes, that’s right, you can now remove the reverb from an audio recording) to EQ analysers. Today we’ll focus on stem separation.

What is a stem?

I’m approaching this blog as more of an introduction to stem separation. There might be a follow-up with more technical details later on, but plenty of articles already cover the details in depth.

Before we can separate stems, we need to know what a stem is.

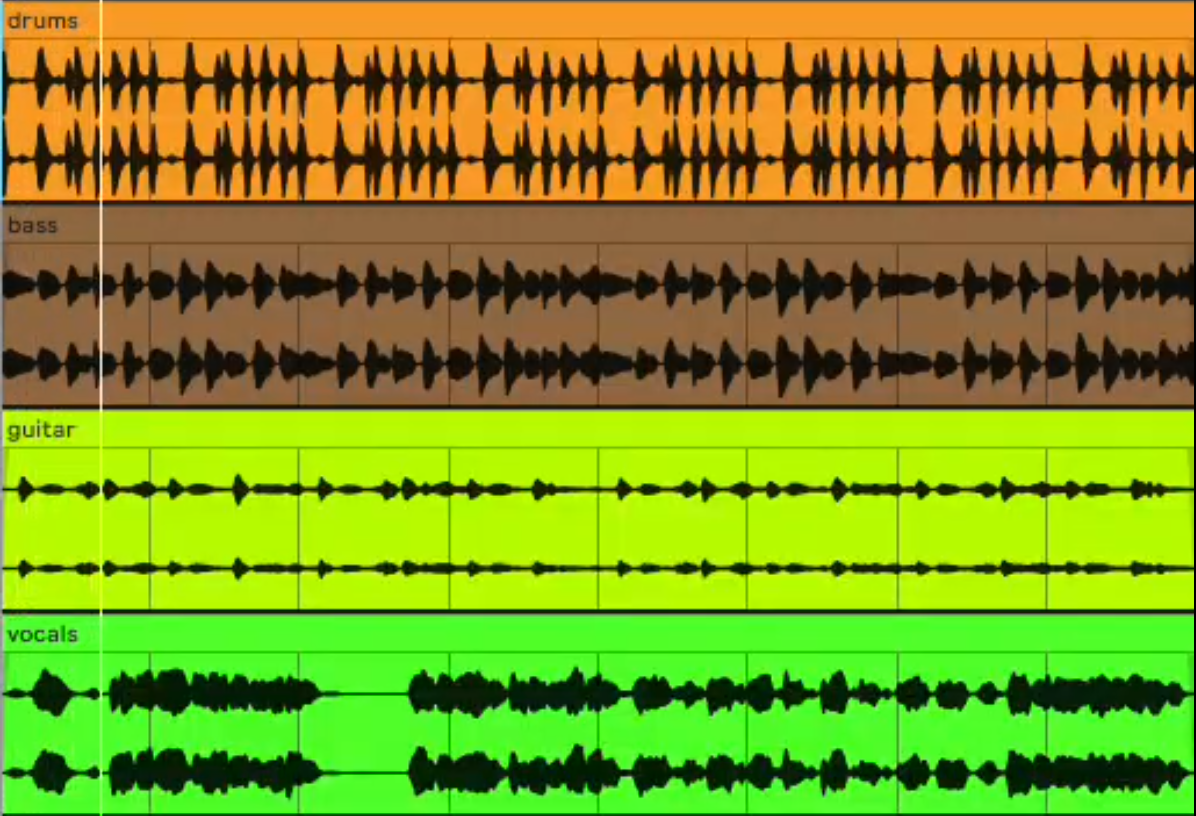

Every song that you or I listen to will likely contain multiple instruments/elements. A classic band line-up might have a drummer, bassist, guitarist and singer. Orchestras can have 60 musicians or more! Nowadays, the vast majority of songs are produced in a Digital Audio Workstation (DAW), in which the number of tracks you can have is really only limited by the power of your computer.

A stem is an audio file from one of the above setups that represents a group of audio tracks recorded for a song. There could be a vocal stem, containing all lead and background vocals combined into one audio track, or a drums stem with the kick, snare, hi-hats, etc. mixed together.

What is stem separation?

Take a piece of cake. What if I wanted to turn it back into its constituent parts of egg, flour, sugar, etc.? Well, I can’t. With stem separation, we can take an audio file containing several stems and separate it into several audio files - one for each stem. Phew, I can get my eggs back!

Why is this useful?

Stem separation is useful because it unlocks creative, educational, and professional possibilities from a mixed audio track - even when the original session files are unavailable.

There are some legitimate, legal uses of stem separation. The best one that comes to mind is the last ever Beatles song, Now And Then. AI was used to extract John Lennon’s vocals from an old demo, and then Paul McCartney and Ringo Starr turned it into the last ever Beatles record.

On the other hand, stem separation gives almost anyone with an internet connection the ability to access the stems of virtually any song - offering music producers a treasure trove of isolated vocals (and lawsuits).

What’s behind the magic?

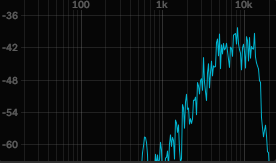

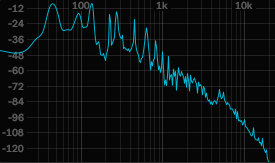

Machine learning models. Think of it this way - every instrument makes a sound that usually has a fairly identifiable pattern on a spectrogram. The main body of a hi-hat lies around 10-15khz whilst the energy of a bass guitar lies anywhere between 50 - 200hz. Sure, two different hi-hats will have difference waveforms and frequencies but the general pattern is the same.

Frequency graph of a hi-hat.

Frequency graph of a bass guitar.

These models are trained on the frequency data of songs where the original stems are available, so they learn which parts of a spectrogram tend to belong to which instrument. Once we know that, we can apply filters to pick and choose which frequencies we want to keep from the original song.
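To make the "pick and choose frequencies" idea concrete, here is a toy sketch in Python using only NumPy. It is not how Logic Pro, music.ai or any real tool works: the two sine waves standing in for a bass and a hi-hat, the 1 kHz cut-off and the `split_by_frequency` helper are all my own assumptions for illustration. A real model would have to work out, moment by moment, what to keep rather than relying on one fixed cut-off.

```python
import numpy as np

SAMPLE_RATE = 44_100  # samples per second
DURATION = 1.0        # seconds

t = np.arange(int(SAMPLE_RATE * DURATION)) / SAMPLE_RATE

# Toy "stems" (assumption): a 100 Hz sine stands in for a bass guitar,
# a 12 kHz sine stands in for a hi-hat.
bass = 0.8 * np.sin(2 * np.pi * 100 * t)
hi_hat = 0.3 * np.sin(2 * np.pi * 12_000 * t)

# The "song" is simply the stems added together.
mix = bass + hi_hat


def split_by_frequency(signal, sample_rate, cutoff_hz):
    """Split a signal into (below cut-off, above cut-off) with a hard FFT mask."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1 / sample_rate)
    low_mask = freqs < cutoff_hz  # True for the frequency bins we keep as "low"
    low = np.fft.irfft(spectrum * low_mask, n=len(signal))
    high = np.fft.irfft(spectrum * ~low_mask, n=len(signal))
    return low, high


bass_estimate, hi_hat_estimate = split_by_frequency(mix, SAMPLE_RATE, cutoff_hz=1_000)

print("max bass error:  ", np.max(np.abs(bass_estimate - bass)))
print("max hi-hat error:", np.max(np.abs(hi_hat_estimate - hi_hat)))
```

This only works so neatly because the two toy stems live in completely different frequency ranges. As soon as two instruments overlap (say, vocals and guitar), a fixed cut-off falls apart, which is where the trained model comes in.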

Of course, it’s a bit more complicated than that. For more technical details you can head to this article which focuses on the model behind music.ai’s stem separation (music.ai claim to have the best model).

How accurate is it?

Like any model, to measure its accuracy you need test data where you have the original stems to compare against.

Once the stems are separated, accuracy evaluation is done using SDR - Signal-to-Distortion Ratio. This is basically a measure of how much distortion / artefacts have been introduced during the separation process compared to the original stem. It’s measured in decibels (dB) rather than as a percentage: the higher the number, the cleaner the separation, and a low or negative number is a nope!
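As a rough taste of the idea, the simplest form of SDR compares the energy of the original stem with the energy of whatever differs between the original and the separated version. The sketch below is a minimal Python version under that assumption; the `sdr_db` name and the noisy test signals are made up for illustration, and the evaluation toolkits used in published benchmarks do more than this.

```python
import numpy as np


def sdr_db(reference, estimate):
    """Basic signal-to-distortion ratio in decibels (higher is better)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    signal_power = np.sum(reference ** 2)
    # Anything in the estimate that differs from the reference counts as distortion.
    error_power = np.sum((reference - estimate) ** 2)
    return 10 * np.log10(signal_power / error_power)


# Hypothetical example: a 220 Hz tone as the "true" stem, plus two estimates
# with different amounts of added noise standing in for separation artefacts.
rng = np.random.default_rng(0)
t = np.arange(44_100) / 44_100
true_stem = np.sin(2 * np.pi * 220 * t)
clean_estimate = true_stem + 0.01 * rng.standard_normal(t.size)
messy_estimate = true_stem + 0.3 * rng.standard_normal(t.size)

print(f"clean estimate: {sdr_db(true_stem, clean_estimate):.1f} dB")
print(f"messy estimate: {sdr_db(true_stem, messy_estimate):.1f} dB")
```

The cleaner estimate scores a much higher SDR than the messy one. Note that a perfect estimate would divide by zero here, so this version is only meant for comparing imperfect results.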

Anyway, I’ll leave the finer details of the SDR calculation till the next blog. To test its accuracy, why don’t we actually split some stems?

An example

Let’s take this 8 bar loop consisting of drums, bass, guitar and vocal samples that I put together (all royalty free, of course).

I’m using the inbuilt stem splitter from Logic Pro, Apple’s DAW for macOS. It’s generally considered to be lacking compared to other tools such as music.ai or lalal.ai, but it’s good for an example!

It takes maybe 4 seconds to run an audio clip of this size through the stem splitter, and this is the result:

You can clearly hear the distortion and artefacts that have been introduced into each clip. We’re still at the stage where stem separation algorithms struggle with music that has lots of hard transients (e.g. drums) or lots of components that share the same frequency range. It’s easy to hear the audio ducking in the vocals, bass and guitar when the drums are hitting, and the splitter has struggled quite badly on the guitar.